Why Back Up SQL Server Databases to Amazon S3

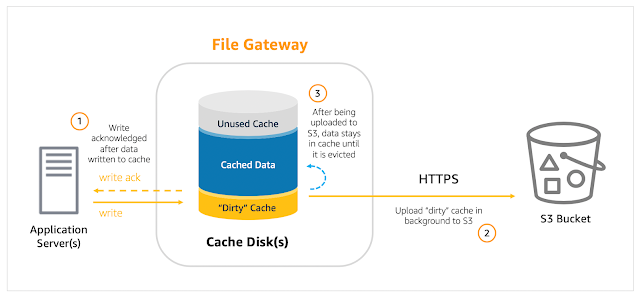

In today's business environment, backing up Microsoft SQL Server databases to a cloud platform such as Amazon Web Services (AWS) offers several benefits. It makes restoring databases from a cloud-based server straightforward, and it provides virtually unlimited storage space. Beyond that, why do organizations want to back up databases from Microsoft SQL Server to S3, a storage service that operates in the cloud?

Benefits of SQL Server to S3

The first benefit is affordable storage. Amazon S3 offers several storage classes, each priced according to how often the data is accessed. With S3 Storage Class Analysis, you can observe access patterns and identify data that can be moved to a lower-cost or higher-cost storage class as requirements dictate.

The second benefit of moving backups from SQL Server to S3 is excellent scalability and durability. Storage resources can scale up or down, and you pay only for the amount you use. This accommodates fluctuating storage demands without investing additionally in resource procurement cycl...