Improving Data Access and Performance with Snowflake Data Lake

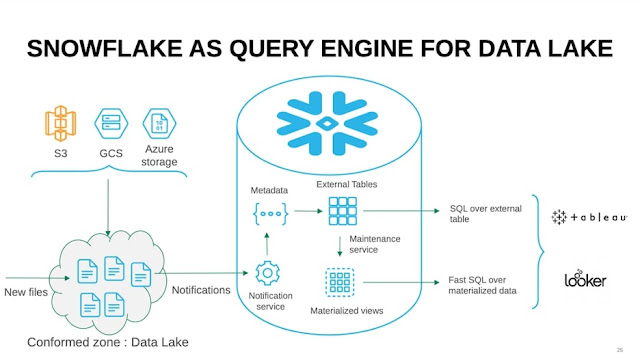

A data lake is a storage architecture that holds high volumes of ingested data for later processing and analysis. Managing data today no longer has to mean running separate systems such as data marts and legacy data warehouses. The introduction of the Snowflake Data Lake has changed the data landscape by eliminating the need to develop, deploy, and maintain different data storage systems. For the first time, a single enterprise-level cloud data platform can manage both structured data, such as tables, and semi-structured data, such as JSON, in one place.

The Snowflake data lake has an extendable data architecture that ensures fast data movement within a cloud-based environment. Data is generated via Kafka or another pipeline and persisted into a cloud bucket. From the bucket, a transformation engine like Apache Spark converts the data into a columnar format like Parquet. It is then loaded into a conformed data zone. The advantage here is that businesses no longer have to choose between a data lake and a data warehouse.
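To make the transformation step more concrete, here is a minimal sketch of what converting row-oriented records into a columnar layout means. It uses only the Python standard library; it does not use Apache Spark or write real Parquet files. The sample events, the `RAW_EVENTS` name, and the `to_columnar` helper are all hypothetical and exist only for illustration.

```python
import json

# Hypothetical raw events, standing in for JSON lines that a pipeline
# such as Kafka might have persisted into a cloud bucket.
RAW_EVENTS = [
    '{"user_id": 1, "action": "login", "ms": 120}',
    '{"user_id": 2, "action": "view", "ms": 45}',
    '{"user_id": 1, "action": "logout"}',
]


def to_columnar(json_lines):
    """Pivot row-oriented JSON records into a dict of column lists."""
    rows = [json.loads(line) for line in json_lines]

    # Collect the union of field names, preserving first-seen order.
    columns = {}
    for row in rows:
        for key in row:
            columns.setdefault(key, [])

    # Fill every column for every row; a missing field becomes None
    # so that all columns keep the same length.
    for row in rows:
        for key, values in columns.items():
            values.append(row.get(key))
    return columns


# The "conformed" result: one list per field instead of one dict per event.
conformed = to_columnar(RAW_EVENTS)
print(conformed)
```

In a layout like this, an analytic query that needs only one field (say, the values under `ms`) can read that single column without touching the rest of each record, which is the general idea behind columnar formats such as Parquet.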

The Snowflake data lake offers a single point of storage where huge volumes of structured and semi-structured data, such as tables, CSV, JSON, and ORC, can be kept without separate silos. Its computing resources are flexible: they scale with the workload and the number of users without disrupting running queries or degrading performance. Storage is similarly flexible and affordable, since users pay only the base storage cost of the underlying cloud provider, such as Amazon S3, Google Cloud, or Microsoft Azure.
